Machine Learning Benchmarks Compare Energy Consumption

Machine learning (ML) is becoming increasingly popular in applications ranging from miniature Internet of Things (IoT) devices to massive data centers. For reasons such as real-time requirements, mandatory offline operation, battery savings, or security and privacy, the best option is often to run ML algorithms and data processing at the edge, where the data is generated. These low-power IoT devices could be embedded microcontrollers, wireless SoCs, or intelligence integrated into a sensor device.

To achieve this, semiconductor manufacturers need small, low-power processors or microcontrollers capable of running the necessary ML software efficiently, sometimes with hardware acceleration built into the chip. Evaluating the performance of a particular device is not straightforward, because its effectiveness in an ML application matters more than raw number-crunching.

To help, MLCommons™, an open engineering consortium, has developed three benchmarking suites to compare ML offerings from different vendors. MLCommons focuses on collaborative engineering work to benefit the machine learning industry through benchmarks, metrics, public datasets, and best practices.

These benchmark suites, called MLPerf™, measure the performance of ML systems during inference, that is, when a trained ML model is applied to new data. The benchmarks can also optionally measure the energy used to complete the inference task. Because the benchmarks are open source and peer-reviewed, they provide an objective, impartial test of performance and energy efficiency.

Of the three benchmark suites available from MLCommons, Silicon Labs has submitted a solution for MLPerf Tiny. This suite is aimed at the smallest, lowest-power devices, typically used in deeply embedded applications such as the IoT or intelligent sensing.

The Benchmarking Tests

MLCommons recently conducted its MLPerf Tiny v1.0 benchmarking round, in which vendors submitted 16 different systems for analysis.

Customers typically look for more than raw performance in these ultra-low-power ML systems. They also focus on power consumption and often treat the energy a system uses to perform a task as the most important metric, especially when designing battery-powered applications.
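Energy per task is the product of power and time, so a chip with a lower power draw is not automatically the more frugal one. The minimal sketch below compares two hypothetical devices; the power and latency figures are invented for illustration and are not MLPerf Tiny results.

```python
# Hypothetical figures for illustration only; not MLPerf Tiny results.

def energy_per_inference_uj(power_mw: float, latency_ms: float) -> float:
    """Energy for one inference in microjoules (mW * ms = uJ)."""
    return power_mw * latency_ms

# Hypothetical device A: lower power draw, but slower.
device_a = energy_per_inference_uj(power_mw=10.0, latency_ms=50.0)
# Hypothetical device B: twice the power draw, but finishes much sooner.
device_b = energy_per_inference_uj(power_mw=20.0, latency_ms=20.0)

print(f"A: {device_a:.0f} uJ, B: {device_b:.0f} uJ")  # A: 500 uJ, B: 400 uJ
```

Under these assumptions device B draws twice the power yet uses less energy per inference, which is why energy rather than power is the figure that determines battery life.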

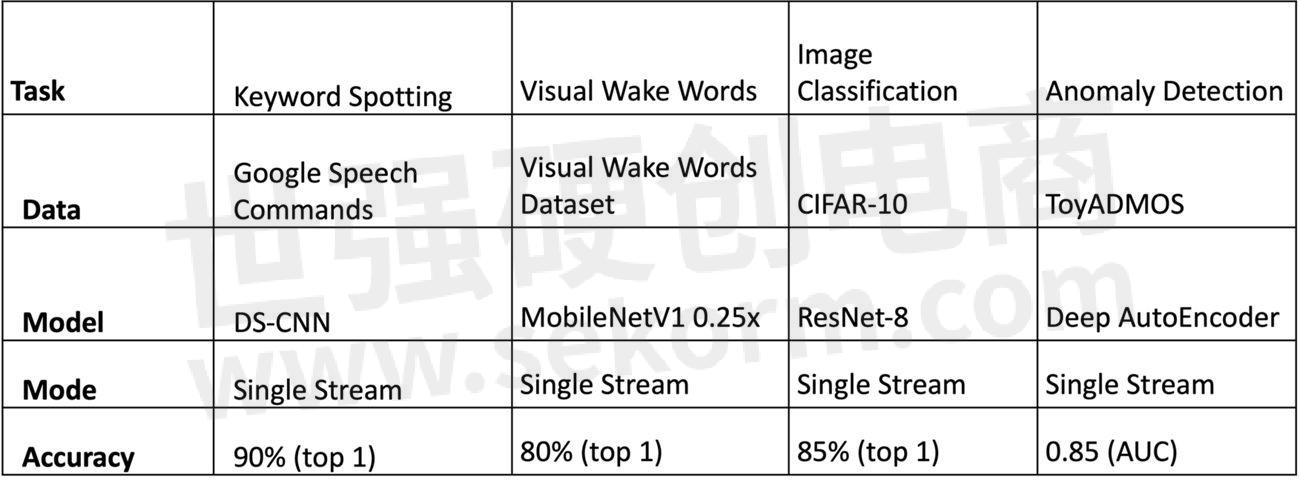

For representative testing, the benchmarks include ML models for four scenarios (see Table 1): keyword spotting, visual wake words, image classification, and anomaly detection. Each scenario uses a specific dataset and ML model to simulate a real-world application. The benchmark suites are designed to test the combination of hardware, software, and ML model. In each case, the testing measured the latency of a single inference to show how quickly the task was completed, and could optionally measure the energy used.
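To show how the two reported quantities relate, here is a minimal sketch that derives latency and energy for one inference from a sampled power trace, integrating power over time with the trapezoidal rule. The function name and the trace values are hypothetical, and this is a generic technique, not the MLPerf Tiny measurement harness itself.

```python
# Hypothetical sketch: latency and energy for one inference from a sampled
# power trace. Names and data are illustrative assumptions, not the
# MLPerf Tiny harness.
from typing import Sequence, Tuple


def latency_and_energy(
    times_s: Sequence[float], power_w: Sequence[float]
) -> Tuple[float, float]:
    """Return (latency in s, energy in J) for one inference window.

    times_s: sample timestamps spanning exactly one inference.
    power_w: instantaneous power (W) at each timestamp.
    Energy is the trapezoidal integral of power over time.
    """
    if len(times_s) != len(power_w) or len(times_s) < 2:
        raise ValueError("need at least two matching samples")
    latency = times_s[-1] - times_s[0]
    energy = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return latency, energy


# Illustrative trace: a 10 ms inference sampled every 2 ms, power in watts.
t = [0.000, 0.002, 0.004, 0.006, 0.008, 0.010]
p = [0.015, 0.020, 0.020, 0.020, 0.020, 0.015]
lat, e = latency_and_energy(t, p)
print(f"latency = {lat * 1e3:.1f} ms, energy = {e * 1e6:.1f} uJ")
```

A real setup would sample far more densely and would need a reliable way to mark where the inference window starts and ends.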

The Silicon Labs System Benchmarked

Silicon Labs submitted its EFR32MG24 Multiprotocol Wireless System on Chip (SoC) for benchmarking. The SoC includes one Arm Cortex®-M33 core (78 MHz, 1.5 MB of flash, 256 kB of RAM) and a Silicon Labs AI/ML accelerator subsystem. It supports multiple 2.4 GHz RF protocols, including Bluetooth Low Energy (BLE), Bluetooth mesh, Matter, OpenThread, and Zigbee, which makes it well suited to mesh IoT wireless applications such as smart homes, lighting, and building automation. This compact development platform provides a simple, time-saving path for AI/ML development.

The SoC ran TensorFlow Lite for Microcontrollers, which enables ML inference models to run on microcontrollers and other low-power devices with small memories. It used optimized neural network kernels from the CMSIS-NN library included in the Silicon Labs Gecko Software Development Kit (SDK).

The Results

The MLPerf™ Tiny v1.0 benchmark results highlight the efficiency of the on-chip accelerator in Silicon Labs’ EFR32MG24 platform. Inferencing calculations are offloaded from the main CPU, allowing it to execute other tasks or even be placed in sleep mode to provide further power savings.
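A short active burst followed by deep sleep is what keeps the average current low. The sketch below is a simple duty-cycle model that turns one inference per period into an average current and an idealized battery-life estimate. Every number in it (5 mA active, 10 ms per inference, 2 µA sleep, one inference per minute, a 220 mAh coin cell) is a hypothetical assumption, not a measured EFR32MG24 value.

```python
# Hypothetical duty-cycle model; every number is an illustrative
# assumption, not a measured EFR32MG24 value.

HOURS_PER_YEAR = 24 * 365


def average_current_ua(active_ma: float, active_ms: float,
                       sleep_ua: float, period_s: float) -> float:
    """Average current (uA) for one active burst of active_ms per period_s,
    with the device asleep for the remainder of the period."""
    period_ms = period_s * 1000.0
    active_charge = active_ma * 1000.0 * active_ms      # uA * ms
    sleep_charge = sleep_ua * (period_ms - active_ms)   # uA * ms
    return (active_charge + sleep_charge) / period_ms


def battery_life_years(capacity_mah: float, avg_ua: float) -> float:
    """Idealized battery life, ignoring self-discharge, radio traffic,
    and temperature effects."""
    hours = capacity_mah * 1000.0 / avg_ua
    return hours / HOURS_PER_YEAR


# One 10 ms inference at 5 mA every 60 s, 2 uA sleep current,
# and an assumed 220 mAh coin cell.
avg = average_current_ua(active_ma=5.0, active_ms=10.0, sleep_ua=2.0, period_s=60.0)
print(f"average current: {avg:.2f} uA")
print(f"estimated life: {battery_life_years(220.0, avg):.1f} years")
```

With these assumptions the sleep current already contributes more to the average than the inference bursts do. Shortening each active burst and keeping the CPU asleep whenever possible therefore both pay off directly in battery life.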

This efficiency is essential for meeting the growing needs of AI/ML-enhanced low-power wireless IoT solutions, allowing devices to stay in the field for up to ten years on a single coin-cell battery. The latest results show a 1.5x to 2x increase in speed and a 40-80% reduction in energy consumption compared with a selection of other benchmarked AI/ML models from the previous MLPerf Tiny v0.7 results.
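For readers comparing result tables, the two headline metrics are computed as ratios of before/after values. The minimal sketch below uses hypothetical latency and energy pairs chosen to land at the top of the quoted ranges; they are not taken from the v0.7 or v1.0 results.

```python
# Illustrative only: the latency and energy values are hypothetical and
# are not taken from the MLPerf Tiny v0.7 or v1.0 results.


def speedup(old_latency_ms: float, new_latency_ms: float) -> float:
    """How many times faster the new result is (2.0 means 2x)."""
    return old_latency_ms / new_latency_ms


def energy_reduction_pct(old_energy_uj: float, new_energy_uj: float) -> float:
    """Percentage of energy per inference saved relative to the old result."""
    return (old_energy_uj - new_energy_uj) / old_energy_uj * 100.0


print(f"speedup: {speedup(100.0, 50.0):.1f}x")                    # 2.0x
print(f"energy saved: {energy_reduction_pct(500.0, 100.0):.0f}%")  # 80%
```

Because energy is power multiplied by time, a 2x speedup at unchanged power would by itself save 50%. Savings larger than that also require a lower average power during inference.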

As ML becomes more widely used in embedded IoT applications, this ability to run inference with low power consumption is essential, and it will enable product designers to apply ML to new use cases and devices.


This document is provided by Sekorm Platform for VIP exclusive service. The copyright is owned by Sekorm. Without authorization, no media outlet, website, or individual may reprint it. Authorized reprints must include a link to www.sekorm.com.