NetOps CI: Validating BGP-based DDoS Protection with Ixia-c and Containerlab

Network operations teams across multiple industries are embracing Continuous Integration (CI) principles to keep service availability high and maintain confidence while rolling out changes to network infrastructure. There is a thriving ecosystem of tools under the umbrella of "NetOps 2.0 Automation" that helps build "smart" CI pipelines.

Keysight Network Test products, traditionally known as Ixia, play a key role in QA and regression testing at many network equipment vendors, as well as large network operators – contributing to a higher quality of network hardware and software they release or deploy. In 2020, we at Keysight embarked on a challenge to make network testing more aligned with the needs of network operations:

◆ faster qualification of new NOS (network operating system) images,

◆ repeatable pre-deployment change validation,

◆ integration with network management and automation tools used in the field.

This blog post is the first in a series that will showcase how a new generation of Keysight Network Test products can be applied to validate network design patterns commonly used across many ISP, data center, campus, and backbone networks.

USE CASE: REMOTE TRIGGERED BLACK HOLE – DDOS PROTECTION 101

Few problems are worse for an Internet-facing service than being under a volumetric DDoS attack and not being equipped to react. Among the many methods available for organizations to combat these types of attacks, Remote Triggered Black Hole (RTBH) is one of the oldest and most universally available to networks that have their ISP connections running over BGP. The main goal of the technique is to limit the blast radius of the attack and prevent DDoS traffic from impacting other services that share the same ISP circuit as the target of the attack. Any network engineer should be ready to leverage this mechanism to counter a DDoS attack.

Being a service provided by an ISP, RTBH relies on correct initiation by the ISP's customers. How can an ISP guide their customers to properly implement their side of the service, and help them keep it ready to be used at all times? Welcome to the concept of a shareable RTBH lab with a CI pipeline! It is something an ISP can make available to all of their customers to validate every change on their routers, as well as for training new engineers and conducting NOC drills. Sharing such a lab is super easy thanks to the latest generation of container-based network emulation tools, among which is Containerlab.

NETWORK EMULATION WITH CONTAINERLAB

As their website puts it, "Containerlab provides a CLI for orchestrating and managing container-based networking labs. It starts the containers, builds virtual wiring between them to create lab topologies of users choice and manages labs lifecycle." While network emulation is not a new concept and projects like GNS3 and EVE-NG are quite mature, the big difference Containerlab-style emulation brings to the industry is an emphasis on Network-as-Code. With Containerlab, it is extremely easy to publish a network lab via a Git repository so that other network engineers can repeat the same setup.

With the help of Containerlab, an ISP that would like to provide an example of their RTBH implementation in action can come up with a typical customer equipment (CE) setup, and then, if needed, a customer can adjust the setup by replacing the CE node with a different NOS container from a vendor they are using. In fact, in this case, I'm going to take a Containerlab-based RTBH lab example that uses open-source FRRouting software and sFlow-RT controller and modify it to fit our needs. But first, let's review what would be the key elements of the RTBH lab.

RTBH LAB REQUIREMENTS

For the purposes of validating volumetric DDoS protection with RTBH, here are the minimum components for the lab:

◆ Provider (PE) and Customer (CE) routers interconnected via an IP circuit and peered over eBGP,

◆ Victim IP address in the customer network – it will be the target of a DDoS attack. With the help of RTBH, all traffic to the Victim will be dropped on the Provider side, without reaching the CE router,

◆ Bystander IP address in the customer network – proper RTBH design will keep the Bystander reachable during DDoS mitigation,

◆ Users – a set of IP addresses behind PE connecting to both Victim and Bystander,

◆ Attackers – a set of IP addresses behind PE originating a volumetric attack on the Victim.

The lab should reproduce the following flow of the events:

1. At first, low-rate traffic from the Users as well as the Attackers should be able to reach both the Victim and Bystander IPs,

2. At some moment, high-rate traffic should start coming from the Attackers toward the Victim, significantly increasing the load on the PE-CE circuit,

3. To mitigate the attack, an RTBH announcement for the Victim's IP address should be initiated from the CE via eBGP,

4. Following the announcement, any traffic to the Victim should be dropped by the PE, decreasing the load on the PE-CE circuit,

5. While the RTBH mitigation is in place, traffic from the Users should be able to reach the Bystander, but not the Victim.

With that implemented, the lab would allow validating:

◆ A proper way for a customer to initiate an RTBH announcement,

◆ CE router configuration to support RTBH,

◆ That the overall RTBH design works as intended, dropping traffic to the Victim on the provider side without impacting other customer services.

APPLYING CI TO THE RTBH LAB

If we look at our starting point, the DDoS lab by sFlow-RT, it does a great job of showcasing how an sFlow-RT collector can automatically trigger an RTBH announcement when incoming traffic crosses a threshold. What is missing to align this example with NetOps 2.0 automation is a Continuous Integration workflow that can execute the lab scenario automatically every time:

◆ A new version of CE router software is released,

◆ A change to the CE router configuration is scheduled,

◆ The sFlow-RT collector version or configuration changes,

◆ A provider has updated either the software version or configuration on their side, and published the shared lab with the new changes,

◆ Normal traffic between PE and CE grows significantly,

◆ And probably many more.

The part of the original lab that prevents such automation is the way both good and bad traffic is generated. This part is manual and uses the hping3 command-line utility, which lacks CI-friendly APIs and reporting capabilities. The original lab also does not validate that traffic to other services, like the Bystander hosts, is unaffected by the DDoS mitigation.

To demonstrate how to extend the original lab with a CI workflow, I'm going to replace the generic Linux containers that represent the Attackers and the Victim with a free version of the Keysight Ixia-c Traffic Engine. In the new lab version published in the Open Traffic Generator Examples repository, the Ixia-c node has one interface attached to the PE and another to the CE. This way, when it generates traffic from the Attackers as well as the Users, Ixia-c can report whether each type of traffic is passing through the lab or being dropped. To define and control traffic, as well as collect metrics, Ixia-c exposes the Open Traffic Generator (OTG) data model using the OpenAPI standard. The OTG model provides an extensive set of controls that are easy to use from a CI program written in a language favored for network automation, like Python or Go. This makes it possible to execute the lab workflow in a repeatable fashion whenever any component of the lab changes, to validate that RTBH capabilities are not impacted.

EXPRESSING THE TEST TRAFFIC IN OTG MODEL

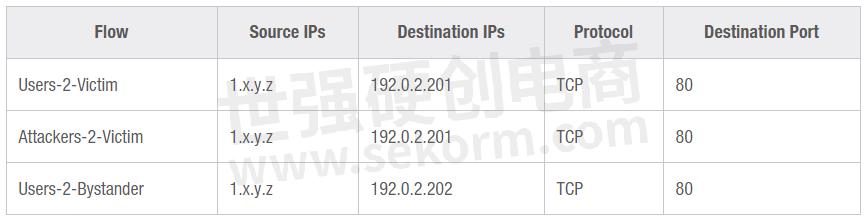

Based on the event sequence we would like to run through the lab, the following traffic flows could be defined:

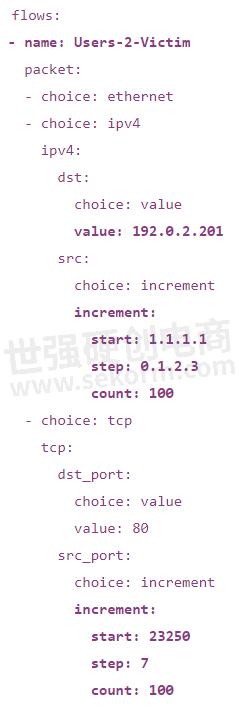

To create a variety of traffic sources, the OTG model has an increment capability that can be applied to various elements. In our case, it has been applied to "randomize" source IP addresses as well as ports using the YAML structure below. We used the same start and increment values for all flows, while choosing destinations depending on whether the target is the Victim or the Bystander.

For our test, we only need packets in one direction: from Users or Attackers to a Victim or Bystander. Other use cases might require bi-directional flows. The OTG model and Ixia-c engine support both types.

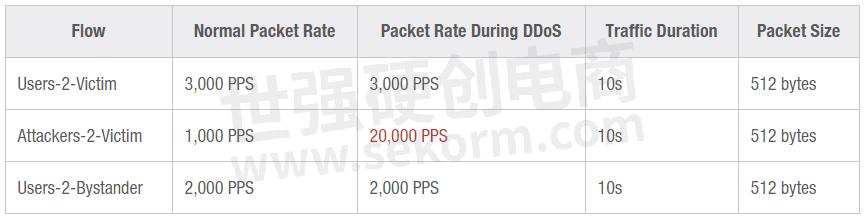
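To make the increment idea concrete, here is a minimal Python sketch of how such a pattern expands into a list of sources. It assumes the pattern is described by start, step, and count values, with the IPv4 step written as a dotted quad; the addresses, port numbers, and function names are hypothetical and are not taken from the lab files.

```python
import ipaddress


def expand_ipv4_increment(start, step, count):
    """List the addresses an increment pattern would generate."""
    base = int(ipaddress.IPv4Address(start))
    delta = int(ipaddress.IPv4Address(step))
    return [str(ipaddress.IPv4Address(base + i * delta)) for i in range(count)]


def expand_port_increment(start, step, count):
    """List the port numbers an increment pattern would generate."""
    return [start + i * step for i in range(count)]


# Hypothetical pattern values, chosen only for illustration.
src_pattern = {"start": "198.51.100.1", "step": "0.0.0.1", "count": 4}
sources = expand_ipv4_increment(**src_pattern)
ports = expand_port_increment(start=1024, step=7, count=4)

print(sources)  # ['198.51.100.1', '198.51.100.2', '198.51.100.3', '198.51.100.4']
print(ports)    # [1024, 1031, 1038, 1045]
```

A larger count gives the receiving side more distinct sources to deal with, which is what makes the generated traffic look like it comes from many hosts.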

Besides ranges for IP addresses and ports, the OTG model also allows you to specify the rate at which to send the packets of each flow, their sizes, and the total number of packets. The combination of packet rate and count determines how long the flow is transmitted. For our lab, we are going to use flow characteristics from the table below, with the rate of traffic from the Attackers increasing 20x during the DDoS phase.

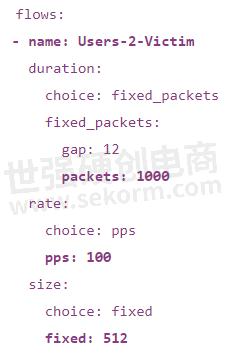

In OTG YAML, an example of traffic rate for a flow with 1000 packets with a rate of 100 PPS looks like this:
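As a rough illustration, the same rate and duration settings can be written as a Python dictionary that mirrors the YAML keys. The key names (rate, pps, duration, fixed_packets, packets) follow the OTG model as I understand it, so treat them as assumptions and check them against the model version you use; the flow name is only a placeholder.

```python
# Sketch of a flow fragment; key names are assumed to mirror the OTG YAML.
flow = {
    "name": "Users-2-Victim",  # placeholder flow name
    "rate": {"choice": "pps", "pps": 100},
    "duration": {
        "choice": "fixed_packets",
        "fixed_packets": {"packets": 1000},
    },
}


def flow_duration_seconds(flow):
    """Transmit time implied by a fixed packet count sent at a fixed rate."""
    packets = flow["duration"]["fixed_packets"]["packets"]
    pps = flow["rate"]["pps"]
    return packets / pps


print(flow_duration_seconds(flow))  # 1000 packets / 100 PPS = 10.0 seconds
```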

Here, I'm intentionally using the values that don't match the targets set in the table. In the lab CI Go program we're going to load the OTG model from the YAML file and then modify traffic rate values as we see fit for the normal traffic, as well as for the DDoS phase, before applying the OTG model to the Ixia-c Traffic Engine.
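The lab's CI program is written in Go, but the idea of overriding the loaded values per phase is easy to sketch in Python. Everything below is an assumption made for illustration: the dictionary layout, the helper names, and the per-flow normal rates are hypothetical, and only the 20x multiplier comes from the description above.

```python
import copy

DDOS_MULTIPLIER = 20  # attacker rate grows 20x in the DDoS phase


def set_rate(flow, pps, seconds):
    """Return a copy of the flow with a new rate and a matching packet count."""
    updated = copy.deepcopy(flow)
    updated["rate"] = {"choice": "pps", "pps": pps}
    updated["duration"] = {
        "choice": "fixed_packets",
        "fixed_packets": {"packets": pps * seconds},
    }
    return updated


def flows_for_phase(loaded, phase, normal_pps, seconds):
    """Apply per-phase rates to flows loaded from the model file."""
    result = []
    for flow in loaded:
        pps = normal_pps[flow["name"]]
        if phase == "ddos" and flow["name"] == "Attackers-2-Victim":
            pps *= DDOS_MULTIPLIER
        result.append(set_rate(flow, pps, seconds))
    return result


# Stand-in for flows loaded from the YAML file (values intentionally generic).
names = ("Users-2-Victim", "Users-2-Bystander", "Attackers-2-Victim")
loaded = [
    {
        "name": n,
        "rate": {"choice": "pps", "pps": 100},
        "duration": {"choice": "fixed_packets", "fixed_packets": {"packets": 1000}},
    }
    for n in names
]

# Hypothetical normal-phase rates; the real targets come from the table above.
normal_pps = {"Users-2-Victim": 100, "Users-2-Bystander": 100, "Attackers-2-Victim": 1000}

ddos = flows_for_phase(loaded, "ddos", normal_pps, seconds=10)
for f in ddos:
    print(f["name"], f["rate"]["pps"], f["duration"]["fixed_packets"]["packets"])
```

Scaling the packet count together with the rate keeps every flow running for the same number of seconds, so both phases finish at a predictable time.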

RTBH DDOS MITIGATION WITH SFLOW-RT

The sFlow-RT controller in the lab will be configured with a threshold of 10,000 PPS to trigger IP Flood attack detection. The CE router is configured to report traffic rates via sFlow to the controller, as well as to receive BGP announcements from the controller. In response to the IP Flood trigger, the controller initiates an RTBH announcement for the IP address of the Victim, with the goal of dropping all packets toward that IP address at the ISP level. In our simplified lab, it is the PE router that drops the packets.
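The decision the controller makes can be sketched in a few lines of Python. This is not sFlow-RT's API, only the logic described above: compare the observed rate with the threshold and, if it is exceeded, produce a host route for the target tagged for blackholing. The community value 65535:666 is the well-known BLACKHOLE community from RFC 7999 and is an assumption here; the lab or your ISP may use a different one.

```python
import ipaddress

FLOOD_THRESHOLD_PPS = 10_000       # threshold from the lab description
BLACKHOLE_COMMUNITY = "65535:666"  # RFC 7999 value; assumed, may differ per ISP


def rtbh_announcement(dst_ip, observed_pps, threshold=FLOOD_THRESHOLD_PPS):
    """Return the route to announce, or None while the rate is below threshold."""
    if observed_pps <= threshold:
        return None
    host_route = ipaddress.ip_network(f"{dst_ip}/32")
    return {"prefix": str(host_route), "community": BLACKHOLE_COMMUNITY}


print(rtbh_announcement("192.0.2.201", observed_pps=20_000))
print(rtbh_announcement("192.0.2.202", observed_pps=200))
```

Announcing a /32 rather than the covering prefix is what keeps the Bystander reachable: only the attacked address is blackholed upstream.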

As the CI program executes, it goes through the normal traffic phase followed by the DDoS phase. During the normal phase, the test succeeds if all the flows finish without packet loss. For the DDoS phase, the CI test looks for excessive packet loss on the "Attackers-2-Victim" and "Users-2-Victim" flows, while "Users-2-Bystander" must experience no packet loss.
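These pass criteria translate into a small check over per-flow transmit and receive counters. The Python sketch below is an illustration rather than the lab's Go test: the function names are hypothetical, and so is the 50% cut-off I use to decide what counts as "excessive" loss.

```python
LOSS_EXPECTED_IN_DDOS = {"Attackers-2-Victim", "Users-2-Victim"}
MIN_BLOCKED_LOSS = 0.5  # hypothetical cut-off for "excessive" packet loss


def loss_ratio(tx, rx):
    """Fraction of transmitted packets that were not received."""
    return 0.0 if tx == 0 else (tx - rx) / tx


def check_phase(metrics, phase):
    """Return a list of failure messages; an empty list means the phase passed."""
    failures = []
    for name, (tx, rx) in metrics.items():
        loss = loss_ratio(tx, rx)
        if phase == "ddos" and name in LOSS_EXPECTED_IN_DDOS:
            if loss < MIN_BLOCKED_LOSS:
                failures.append(f"{name}: expected traffic to be dropped, loss {loss:.0%}")
        elif loss > 0:
            failures.append(f"{name}: unexpected loss {loss:.0%}")
    return failures


# Made-up counters as (packets sent, packets received).
ddos_metrics = {
    "Attackers-2-Victim": (200_000, 15_000),
    "Users-2-Victim": (1_000, 80),
    "Users-2-Bystander": (1_000, 1_000),
}
print(check_phase(ddos_metrics, "ddos"))  # [] means all criteria are met
```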

Below is a visual representation of the flow behavior reported by the sFlow-RT dashboard. To make this image more pronounced, the duration of the normal traffic phase was extended to 3 minutes, instead of the 10 seconds described above. Such a long duration won't be necessary for the typical CI pipeline. As the flow metrics are taken from the CE router, you can see how the RTBH announcement triggered by a spike of traffic blocks all packets destined to the Victim (192.0.2.201) on the upstream PE router, thus preventing saturation of the PE-CE circuit. Traffic to the Bystander (192.0.2.202) keeps running as-is.

PUTTING EVERYTHING TOGETHER

Let's summarize our CI setup. We have:

◆ Containerlab CLI tool to create and manage an emulated network topology using Docker, from a topology definition YAML file.

◆ The topology is described in the lab YAML file and is composed of PE and CE routers running FRRouting software, an sFlow-RT Controller, as well as an Ixia-c Traffic Engine, all of which are distributed as Docker images.

◆ Each node in the topology boots with a configuration that comes as part of the lab repository, with a set of files for each PE, CE, and Controller node.

◆ The initial Ixia-c traffic model is defined in an Open Traffic Generator YAML file.

◆ Finally, to execute CI tests, a Go test package is provided with the lab.

To run the lab tests, the Go program should be executed in the lab directory with go test, either by the CI pipeline or manually. The program loads the OTG traffic model from the YAML file and applies any necessary modifications to the traffic flows, for example, updating the packet rate or count for each flow. It then connects to the Ixia-c container and pushes the OTG model to it for execution. From that point on, the program monitors flow metrics as reported by Ixia-c to determine when all the packets have been transmitted through the topology, and how many of them were received.
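The monitoring step boils down to a polling loop. Here is a minimal Python sketch of it, assuming each flow metric carries a transmit state plus frames_tx and frames_rx counters (field names as I recall them from the OTG model, so verify them for your version). The get_metrics callable is a hypothetical stand-in for the real call to Ixia-c, which in the lab is made from Go.

```python
import time


def wait_for_flows(get_metrics, timeout_s=60.0, interval_s=0.5):
    """Poll flow metrics until every flow reports it has stopped transmitting."""
    deadline = time.monotonic() + timeout_s
    while True:
        metrics = get_metrics()
        if metrics and all(m["transmit"] == "stopped" for m in metrics):
            return metrics
        if time.monotonic() >= deadline:
            raise TimeoutError("flows are still transmitting")
        time.sleep(interval_s)


# Fake metric snapshots standing in for successive replies from Ixia-c.
snapshots = iter([
    [{"name": "Users-2-Bystander", "transmit": "started", "frames_tx": 400, "frames_rx": 400}],
    [{"name": "Users-2-Bystander", "transmit": "stopped", "frames_tx": 1000, "frames_rx": 1000}],
])

final = wait_for_flows(lambda: next(snapshots), interval_s=0)
for m in final:
    print(m["name"], m["frames_tx"], m["frames_rx"])
```

The timeout matters in CI: if a flow never stops, the job fails with a clear error instead of hanging the pipeline.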

Below, you can see an example of the program output. There are two test phases: the first with normal traffic rates, when all three traffic flows complete in full, and the second with the Attackers-2-Victim flow sending packets above the predefined DDoS rate threshold of 10,000 PPS. You can see how, during the second phase, traffic on both flows destined to the Victim stops shortly after the phase begins, an indication that these packets are being dropped by the PE in response to the RTBH announcement. For the second phase, the pass criteria are no packet loss on the Users-2-Bystander flow and extensive packet loss on both flows toward the Victim.

SUMMARY

As you can see, describing network validation logic in the Open Traffic Generator model and executing it with the Ixia-c Traffic Engine, all within a containerized network lab managed by Containerlab, brings the NetOps 2.0 automation approach to cases where you need to run traffic scenarios as part of your network CI pipeline.
